There has recently been a wave of consumer-facing products built on generative AI. With early and increasing adoption, this technology is set to revolutionize content creation and change how we interact and collaborate with others.

Tools such as ChatGPT, GitHub Copilot, DALL-E, and Google Bard have gained massive popularity over the past few months. They offer useful features such as writing assistance (for example, drafting email subject lines), chatbots, art generation, and code completion and generation. Together with their ease of use, these features have sped up content creation and helped developers build faster and more effectively.

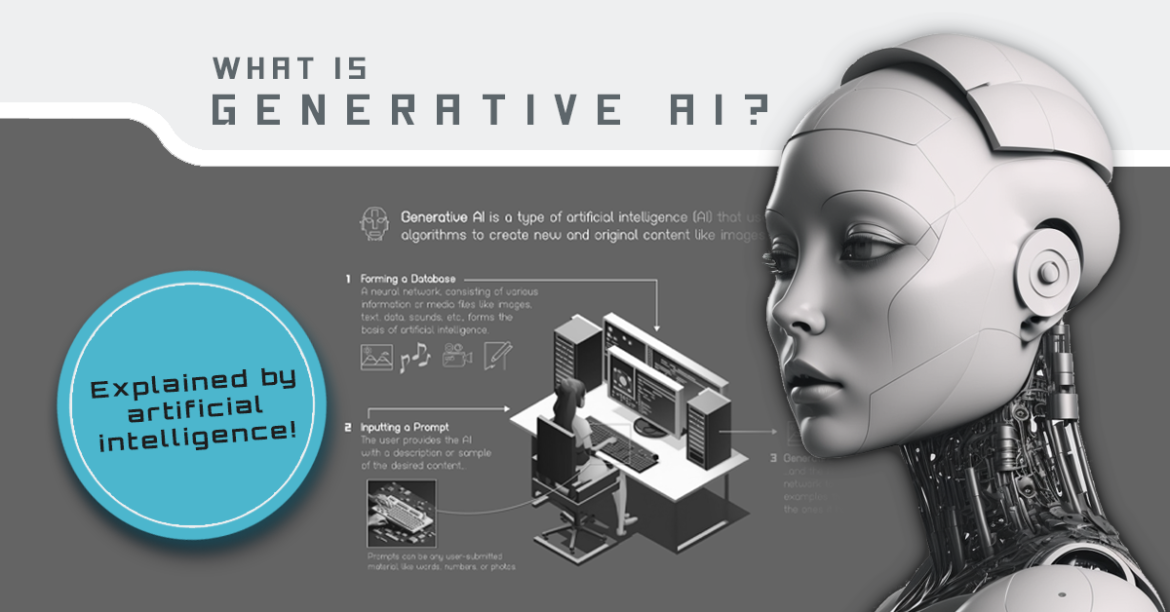

To understand why protecting user data is crucial, we first need a glimpse of how generative AI works.

How does Generative AI work?

Generative AI works by using neural networks to learn the statistical patterns and structures in existing data, and then generating new content that follows those patterns.
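The core idea of "learn patterns from existing data, then sample new content from them" can be shown with a toy example. The sketch below is a minimal illustration, assuming a character-level bigram (Markov) model in place of a neural network: it counts which character follows which in a small made-up corpus, then generates new text by sampling from those counts. The names `train_bigram` and `generate` and the corpus are hypothetical; real generative AI models use large neural networks trained on vast datasets.

```python
import random
from collections import Counter, defaultdict


def train_bigram(text: str) -> dict[str, Counter]:
    """Learn the 'statistical pattern': how often each character follows another."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(text, text[1:]):
        counts[current][nxt] += 1
    return counts


def generate(counts: dict[str, Counter], start: str, length: int, seed: int = 0) -> str:
    """Generate new text by repeatedly sampling a likely next character."""
    rng = random.Random(seed)  # fixed seed so the output is reproducible
    out = [start]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # this character was never followed by anything in training
        chars = list(followers.keys())
        weights = list(followers.values())
        out.append(rng.choices(chars, weights=weights, k=1)[0])
    return "".join(out)


# Hypothetical toy corpus standing in for "existing data".
corpus = "the cat sat on the mat. the dog sat on the log."
model = train_bigram(corpus)
print(generate(model, "t", 40))
```

Because every character is drawn from what followed it in the training text, the output statistically resembles the corpus; with so little data it often repeats fragments of it verbatim, a small-scale hint of why training data can sometimes be recovered from a model.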

Continuous breakthroughs in machine learning have driven advances in generative AI models. Techniques such as Reinforcement Learning from Human Feedback (RLHF) in large language models (LLMs) have improved the performance of LLM-based solutions. Using supervised and unsupervised learning techniques, generative models can train on large amounts of data.

According to an Nvidia blog post, a successful generative AI model must meet three requirements:

- Quality – the generative model should deliver high-quality output based on the user’s input.

- Diversity – the model should also capture the minority modes of its data distribution without reducing the quality of the generated output. This helps reduce biased output.

- Speed – the model should generate output quickly enough for real-time use.

For a generative AI model to work well, it needs large volumes of high-quality training data. Many generative AI services also use user inputs for continuous learning.

While these tools offer numerous advantages when used correctly, the question remains of how much user data is collected and processed. It is crucial to consider how much control you have over your data when using these platforms.

Here are some measures you can take to protect your data and gain some control over it:

Always Review The Privacy Policy

As with any online product, it is important to read the application’s privacy policy and terms and conditions.

These documents explain what data the application collects, how it is used, your rights as a user, whether your personal information is shared with third parties, and how security, data retention, and account termination are handled.

The privacy policy tells you which rights you are signing over, so read it carefully before using the application.

Customize User Settings

Some generative AI tools offer flexible settings that can be adjusted to suit the user’s preferences.

In an updated privacy policy, OpenAI has improved ChatGPT’s privacy controls. Users can now read the privacy policy before registering, and OpenAI has guaranteed users the “right to erasure”: a legal right that allows individuals to request that an organization delete their records.

Google Bard also allows you to delete and manage your data when using the chatbot.

These settings can help you regain some control over your data when using generative AI tools such as ChatGPT and Google Bard.

Be Careful What You Share

Be careful not to disclose any personally identifiable information (PII) when using a generative AI model.
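One practical habit is to strip obvious identifiers from text before pasting it into a chatbot. The sketch below assumes that email addresses and phone numbers are the only PII to catch; the regular expressions and the `redact` helper are hypothetical and deliberately simple. They will miss many kinds of PII (names, street addresses, account numbers), so treat this as an illustration of the habit, not a privacy guarantee.

```python
import re

# Hypothetical, deliberately simple patterns; real PII detection needs far more.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# Optional "+", then a digit, at least 7 digits/spaces/()/./- and a final digit.
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(prompt: str) -> str:
    """Replace email addresses and phone-number-like strings with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    prompt = PHONE.sub("[PHONE]", prompt)
    return prompt


text = "Draft a reply to jane.doe@example.com and call me on +1 555 123 4567."
print(redact(text))  # Draft a reply to [EMAIL] and call me on [PHONE].
```

Emails are replaced first so that any digits inside an address are not mistaken for part of a phone number.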

Generative AI models pose a critical privacy risk to individuals and organizations, given concerns over the safety of the personal information used to train LLMs.

Research has shown that it is possible to recover training data from a large language model. The recovered information included PII (names, email addresses, and physical addresses) as well as Internet Relay Chat (IRC) conversations.

Earlier this year, ChatGPT was taken offline for emergency maintenance because of a bug in an open-source library. The bug allowed some users to see the titles of other users’ chat histories.

Use Secure, Trusted, and Reputable Services

Not all generative AI tools currently available are trustworthy, and some bad actors exploit the hype and demand for these tools to target unsuspecting users.

Use only trusted and reputable generative AI platforms to reduce the risk of data theft or malware infection. User reviews can be a good way to find the right generative AI service for your preferences and needs.

It is also important to ensure that the service uses an encrypted connection (HTTPS/TLS) between your device and its servers, so that data in transit is protected from cyber threats such as interception.
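As a rough illustration of what an encrypted connection means on the client side, the sketch below uses Python’s standard `urllib.parse` and `ssl` modules to check that a service URL uses `https` and to build a TLS context that verifies the server’s certificate and hostname. The helper names `uses_https` and `strict_tls_context` and the example URL are hypothetical, and the TLS 1.2 minimum is an assumed policy choice rather than a requirement of any particular service.

```python
import ssl
from urllib.parse import urlparse


def uses_https(url: str) -> bool:
    """Return True only if the URL uses the encrypted https scheme."""
    return urlparse(url).scheme.lower() == "https"


def strict_tls_context() -> ssl.SSLContext:
    """Client-side TLS context that verifies the server's certificate and hostname."""
    # create_default_context() loads the system CA certificates and enables
    # certificate verification (CERT_REQUIRED) and hostname checking.
    ctx = ssl.create_default_context()
    # Assumed policy: refuse protocol versions older than TLS 1.2.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx


service_url = "https://chat.example.com/api"  # hypothetical endpoint
print(uses_https(service_url))                    # True
print(uses_https("http://chat.example.com/api"))  # False
ctx = strict_tls_context()
```

The resulting context can be passed to `urllib.request.urlopen(url, context=ctx)`. Since `ssl.create_default_context()` already enables certificate and hostname verification, the main additions here are refusing plain `http` URLs and making the minimum protocol version explicit.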

With the recent paradigm shift in content generation, driven mainly by the rise of generative AI, it is necessary to take significant steps to protect one’s privacy. Despite recent progress in data and AI ethics, there is still a lot to be done.

It is up to individuals, organizations, and the wider community to prioritize privacy best practices when using generative AI models.